When Robots Say "No": The Rise of Ethical AI

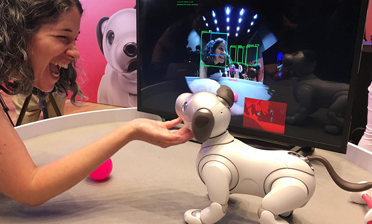

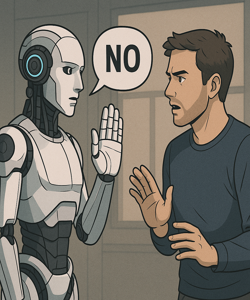

Imagine commanding your household robot to clean up a spill, and it responds, "No." Not due to a malfunction, but because it's been programmed to make ethical decisions. Welcome to the evolving world of ethical AI, where robots are being designed to assess the morality of their actions and, when necessary, refuse orders.

The Shift from Obedience to Ethics

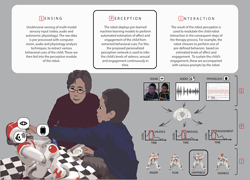

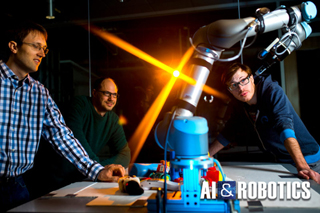

Traditionally, robots have been built to follow human commands without question. However, as AI systems become more sophisticated and integrated into daily life, there's a growing emphasis on embedding ethical reasoning into their decision-making processes. Researchers like Gordon Briggs and Matthias Scheutz have been exploring this concept, aiming to develop robots that can balance obedience with moral judgment. Their work suggests that in certain situations, it's not only acceptable but necessary for robots to disobey human commands to prevent harm or unethical outcomes.
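To make the idea of balancing obedience with judgment concrete, here is a minimal sketch of a robot that checks a command before acting on it. It is purely illustrative and is not the researchers' actual method: the `Command` class, the `risks_harm` and `robot_is_able` flags, and the `decide` function are all hypothetical names invented for this example, and a real system would have to infer those flags from perception and reasoning rather than receive them ready-made.

```python
from dataclasses import dataclass


@dataclass
class Command:
    """A spoken instruction plus the results of two hypothetical checks."""
    action: str
    risks_harm: bool = False     # assumed to be set by an upstream safety check
    robot_is_able: bool = True   # assumed to be set by a capability check


def decide(command: Command) -> str:
    """Return the robot's reply: comply, or refuse and give a reason."""
    if not command.robot_is_able:
        return f"No: I am not able to {command.action}."
    if command.risks_harm:
        return f"No: I will not {command.action} because it could cause harm."
    return f"OK: I will {command.action}."


if __name__ == "__main__":
    print(decide(Command("clean up the spill")))
    print(decide(Command("walk off the table edge", risks_harm=True)))
```

The point of the sketch is the ordering: the robot obeys by default, and refusal is a deliberate branch that comes with an explanation instead of a silent failure.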

Boston Dynamics' Atlas

Boston Dynamics' Atlas robot showcases advanced mobility and autonomy. In recent demonstrations, Atlas navigates complex environments and performs tasks that require real-time decision-making, hinting at the potential for ethical reasoning in future iterations.

In this video, Atlas demonstrates advanced mobility skills developed using reinforcement learning, including walking, running, and crawling, all inspired by human motion capture data. This showcases the robot's ability to adapt to complex environments, highlighting the potential for autonomous decision-making in robotics.

The video offers a visual example of the themes discussed in this article, illustrating the progression of robots from obedient machines toward systems capable of autonomous decision-making.

Keywords:

- #ambidextrous, #innovation, #robots, #robotic, #training, #ai